Bayes’ theorem implementation in Python

Machine learning is a method of data analysis that automates the building of analytical models from data. Using algorithms that learn iteratively from data, machine learning allows computers to find hidden insights without being explicitly programmed where to look. The naive Bayes algorithm is one of the most popular machine learning techniques. In this article we will look at how to implement it in Python.

Before you can understand Bayes’ theorem, you need to know two related concepts: conditional probability and Bayes’ rule.

Conditional probability is the probability that an event will happen, given that another event has already happened.
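The definition above is usually written as the formula below, for events A and B with P(B) > 0. Multiplying both sides by P(B) gives the product rule that the singer example relies on.

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} \qquad\Longrightarrow\qquad P(A \cap B) = P(B)\,P(A \mid B)$$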

Let’s say we have a group of people. Some of them are singers, and each person is either male or female. If we select a person at random, what is the probability that this person is male? What is the probability that this person is both male and a singer? Conditional probability answers the second question: it is the probability of being male, multiplied by the probability of being a singer given that the person is male.

$$P(\text{Singer} \cap \text{Male}) = P(\text{Male}) \times P(\text{Singer} \mid \text{Male})$$
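To make the product rule concrete, here is a small counting sketch over a made-up population of six people (the data is hypothetical, chosen only for illustration). It computes P(Singer ∩ Male) directly and again as P(Male) × P(Singer | Male), and both give the same value.

```python
from fractions import Fraction

# Hypothetical population: (sex, is_singer) for each person.
people = [
    ("male", True), ("male", False), ("male", True),
    ("female", True), ("female", False), ("female", False),
]
total = len(people)

males = [p for p in people if p[0] == "male"]
male_singers = [p for p in males if p[1]]

# Direct joint probability: fraction of everyone who is a male singer.
p_singer_and_male = Fraction(len(male_singers), total)

# Product rule: P(Male) x P(Singer | Male).
p_male = Fraction(len(males), total)
p_singer_given_male = Fraction(len(male_singers), len(males))
p_product = p_male * p_singer_given_male

print(p_singer_and_male, p_product)  # 1/3 1/3
```

Exact fractions are used so the two results can be compared without floating-point rounding.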

What is Bayes’ rule?

Bayes’ rule can be stated as follows. Let $A_1, A_2, \ldots, A_n$ be mutually exclusive events that together form the sample space $S$, and let $B$ be any event from the same sample space such that $P(B) > 0$. Then

$$P(A_k \mid B) = \frac{P(A_k \cap B)}{P(A_1 \cap B) + P(A_2 \cap B) + \cdots + P(A_n \cap B)}$$

The denominator equals $P(B)$, because the events $A_1, \ldots, A_n$ cover the whole sample space without overlapping.
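Since each joint term can be expanded as P(A_i ∩ B) = P(A_i) × P(B | A_i), Bayes’ rule can be computed from priors and likelihoods. The sketch below uses a hypothetical helper `posterior` and made-up numbers for three mutually exclusive events; it divides each joint probability by the sum of all of them, exactly as in the formula.

```python
def posterior(priors, likelihoods):
    """Return P(A_k | B) for every k, given P(A_k) and P(B | A_k).

    priors[k] = P(A_k) and likelihoods[k] = P(B | A_k); the events A_k are
    assumed mutually exclusive and exhaustive, so the priors sum to 1.
    """
    # Joint probabilities P(A_k ∩ B) = P(A_k) * P(B | A_k)
    joints = [p * l for p, l in zip(priors, likelihoods)]
    evidence = sum(joints)  # the denominator, equal to P(B)
    if evidence == 0:
        raise ValueError("P(B) must be greater than 0")
    return [j / evidence for j in joints]


# Hypothetical numbers: priors 0.5, 0.3, 0.2 and likelihoods 0.1, 0.4, 0.5.
post = posterior([0.5, 0.3, 0.2], [0.1, 0.4, 0.5])
print([round(p, 3) for p in post])  # [0.185, 0.444, 0.37]
```

Note how the event with the smallest prior here ($A_3$) still ends up more probable than $A_1$ once the evidence $B$ is taken into account.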

What is a Bayes classifier?

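Naive Bayes classifiers are a family of simple probabilistic classifiers that apply Bayes’ theorem with strong (naive) independence assumptions between the features. In other words, we can use the theory and equations above for classification: the classes play the role of the events $A_1, \ldots, A_n$, the observed features play the role of $B$, and the classifier picks the class with the highest probability given the features.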
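As a sketch of the idea (not how any particular library implements it), a naive Bayes classifier for categorical features scores each class C by P(C) multiplied by P(x_i | C) for every feature x_i, because the naive assumption treats the features as independent given the class. It then returns the highest-scoring class. The helper names `train_naive_bayes` and `classify` are hypothetical, and the add-one (Laplace) smoothing is an assumption added so that an unseen feature value does not zero out a class. The training pairs are taken from the names example later in this article.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(samples):
    """samples: list of (feature_dict, label) pairs."""
    class_counts = Counter(label for _, label in samples)
    # value_counts[label][feature][value] = how often value occurred for label
    value_counts = defaultdict(lambda: defaultdict(Counter))
    feature_values = defaultdict(set)  # distinct values seen per feature
    for features, label in samples:
        for name, value in features.items():
            value_counts[label][name][value] += 1
            feature_values[name].add(value)
    return class_counts, value_counts, feature_values


def classify(model, features):
    class_counts, value_counts, feature_values = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        # Work in log space: log P(C) + sum of log P(x_i | C)
        score = math.log(count / total)
        for name, value in features.items():
            seen = value_counts[label].get(name, Counter())[value]
            n_values = len(feature_values.get(name, ())) + 1  # +1 slot for unseen values
            score += math.log((seen + 1) / (count + n_values))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


train = [
    ({"last_letter": "n", "last_is_vowel": 0}, "M"),  # ebin
    ({"last_letter": "s", "last_is_vowel": 0}, "M"),  # leekas
    ({"last_letter": "h", "last_is_vowel": 0}, "M"),  # jinesh
    ({"last_letter": "u", "last_is_vowel": 1}, "F"),  # neethu
    ({"last_letter": "y", "last_is_vowel": 1}, "F"),  # mary
    ({"last_letter": "u", "last_is_vowel": 1}, "F"),  # neenu
    ({"last_letter": "a", "last_is_vowel": 1}, "F"),  # sanitha
    ({"last_letter": "a", "last_is_vowel": 1}, "F"),  # lekha
]
model = train_naive_bayes(train)
print(classify(model, {"last_letter": "a", "last_is_vowel": 1}))  # F
```

Summing logarithms instead of multiplying probabilities avoids numerical underflow when there are many features.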

Bayes classifier implementation in Python

Now let’s implement this in Python. Fortunately, Python has an excellent machine learning library called scikit-learn. In this example we create some sample points in three-dimensional space and label each one as either RED or BLUE. Our task is to classify new points in this space as either BLUE or RED. Let’s start by importing the required modules.

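import warnings
warnings.filterwarnings('ignore')  # suppress library warnings to keep the output clean

import numpy as np
import matplotlib.pyplot as plt
from sklearn.naive_bayes import GaussianNB
from IPython.display import Image  # used later to display a diagram in the notebook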

Now we create sample three-dimensional training data, eight points for each class:

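# BLUE class: x, y and z coordinates of eight points
x_blue = np.array([1, 2, 1, 5, 1.5, 2.4, 4.9, 4.5])
y_blue = np.array([5, 6.3, 6.1, 4, 3.5, 2, 4.1, 3])
z_blue = np.array([5, 1.3, 1.1, 1, 3.5, 2, 4.1, 3])

# RED class: x, y and z coordinates of eight points
x_red = np.array([5, 7, 7, 8, 5.5, 6, 6.1, 7.7])
y_red = np.array([5, 7.7, 7, 9, 5, 4, 8.5, 5.5])
z_red = np.array([5, 6.7, 7, 9, 1, 4, 6.5, 5.5])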

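Next, we reshape the data into the layout scikit-learn expects: a 2-D array with one row per point and one column per coordinate, plus a 1-D array of class labels.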

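# Stack the coordinates column-wise: shape (8, 3) for each class
red_points = np.column_stack((x_red, y_red, z_red))
blue_points = np.column_stack((x_blue, y_blue, z_blue))
points = np.concatenate([red_points, blue_points])  # shape (16, 3)

# Labels: 1 for RED, 0 for BLUE, in the same order as the rows of points
output = np.concatenate([np.ones(x_red.size), np.zeros(x_blue.size)])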
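A common pitfall when building this array: in Python 3, `zip()` returns a lazy iterator, so writing `np.array(zip(x, y, z))` wraps the iterator itself in a zero-dimensional object array instead of building a table of points. The quick check below, using the RED coordinates from above, contrasts that with `np.column_stack`, which gives the intended layout of one row per point and one column per coordinate.

```python
import numpy as np

x_red = np.array([5, 7, 7, 8, 5.5, 6, 6.1, 7.7])
y_red = np.array([5, 7.7, 7, 9, 5, 4, 8.5, 5.5])
z_red = np.array([5, 6.7, 7, 9, 1, 4, 6.5, 5.5])

wrong = np.array(zip(x_red, y_red, z_red))      # 0-d object array holding the iterator
right = np.column_stack((x_red, y_red, z_red))  # one row per point, one column per axis

print(wrong.shape, wrong.dtype)  # () object
print(right.shape)               # (8, 3)
print(right[0])                  # [5. 5. 5.]
```

`np.array(list(zip(x_red, y_red, z_red)))` builds the same (8, 3) array; `column_stack` simply avoids the intermediate list of tuples.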

Now we want to classify the following point:

predictor = np.array([5.3,4.2,3.3])

Now we apply naive Bayes classification. GaussianNB assumes that, within each class, every coordinate follows a normal (Gaussian) distribution.

classifier = GaussianNB()
classifier.fit(points, output)
print(classifier.predict([predictor]))  # 1.0 means RED, 0.0 means BLUE

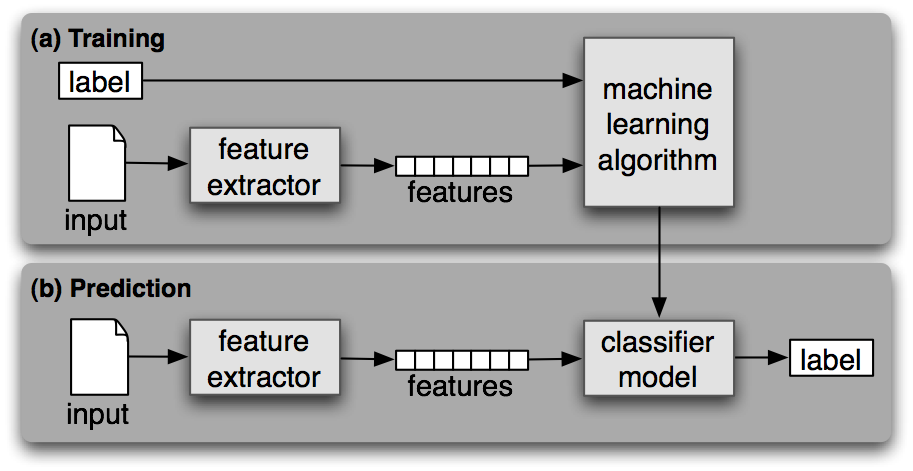
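To see what `GaussianNB` does under the hood, here is a from-scratch NumPy sketch of Gaussian naive Bayes. The function names are hypothetical, not scikit-learn API. The sketch estimates a prior for each class, plus a mean and variance for each coordinate within each class. It then scores a new point with log P(C) plus the sum of the per-coordinate Gaussian log-densities and picks the best class. Mirroring scikit-learn’s default `var_smoothing=1e-9`, it adds a small fraction of the largest feature variance to every variance for numerical stability. Treat an exact match with scikit-learn’s internals as an assumption to check against `classifier.predict`.

```python
import numpy as np


def fit_gaussian_nb(X, y, var_smoothing=1e-9):
    """Estimate class priors and per-class feature means and variances."""
    classes = np.unique(y)
    # Small constant added to every variance, scaled by the largest feature variance
    eps = var_smoothing * np.var(X, axis=0).max()
    priors = np.array([np.mean(y == c) for c in classes])
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    variances = np.array([X[y == c].var(axis=0) + eps for c in classes])
    return classes, priors, means, variances


def predict_gaussian_nb(model, X_new):
    """Return the most probable class for each row of X_new."""
    classes, priors, means, variances = model
    X_new = np.atleast_2d(np.asarray(X_new, dtype=float))
    # Broadcast to shape (points, classes, features)
    diff = X_new[:, None, :] - means[None, :, :]
    log_pdf = -0.5 * (np.log(2 * np.pi * variances)[None, :, :]
                      + diff ** 2 / variances[None, :, :])
    # Naive independence: the joint log-likelihood is a sum over features
    joint = np.log(priors)[None, :] + log_pdf.sum(axis=2)
    return classes[np.argmax(joint, axis=1)]


# The article's training data; label 1 = RED, 0 = BLUE
x_blue = np.array([1, 2, 1, 5, 1.5, 2.4, 4.9, 4.5])
y_blue = np.array([5, 6.3, 6.1, 4, 3.5, 2, 4.1, 3])
z_blue = np.array([5, 1.3, 1.1, 1, 3.5, 2, 4.1, 3])
x_red = np.array([5, 7, 7, 8, 5.5, 6, 6.1, 7.7])
y_red = np.array([5, 7.7, 7, 9, 5, 4, 8.5, 5.5])
z_red = np.array([5, 6.7, 7, 9, 1, 4, 6.5, 5.5])

points = np.vstack([np.column_stack((x_red, y_red, z_red)),
                    np.column_stack((x_blue, y_blue, z_blue))])
output = np.concatenate([np.ones(x_red.size), np.zeros(x_blue.size)])

model = fit_gaussian_nb(points, output)
print(predict_gaussian_nb(model, [5.3, 4.2, 3.3]))  # [0.]  (BLUE)
```

The point (5.3, 4.2, 3.3) lies far from the RED mean in the x coordinate, where the RED class has a small variance. That penalty outweighs the BLUE class’s distance in x.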

Let’s move on to a more real-world example. Suppose we have a list of names and want to classify them into Male and Female categories. Our classification process is shown below.

Image(filename='classification.png')

The first step is feature extraction. From each name I extract the following evidence: the last letter, the last two letters, and whether the last letter is a vowel (last_is_vowel).
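Here is a minimal sketch of this feature extraction for a single name in plain Python. Like the pandas code later in the article, it treats "aeiouy" as the vowels. The function name `name_features` is hypothetical. It returns a dictionary mapping feature names to values, which is the featureset format NLTK’s classifiers work with.

```python
def name_features(name):
    """Return the three features used in this article for a lowercase name."""
    last = name[-1]
    return {
        "last_letter": last,
        "last_two_letter": name[-2:],
        # 1 if the name ends in a vowel ('y' is counted as a vowel here), else 0
        "last_is_vowel": int(last in "aeiouy"),
    }


print(name_features("mary"))
# {'last_letter': 'y', 'last_two_letter': 'ry', 'last_is_vowel': 1}
```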

The next step is the machine learning algorithm. Of course, we are going to use naive Bayes classification.

Let’s start the implementation in Python. Since this is more of an NLP problem, we can use Python’s NLTK module. We have two CSV files: names.txt, the training file, and predict.txt, which contains the names to be predicted.

Let’s import the required modules:

import numpy as np
import pandas as pd
import nltk

Next, we define a function that parses a CSV file and returns the feature sets. We use pandas to parse the CSV file.

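def get_data(name, result="gender"):
    """Read the CSV file `name` and return a list of (feature_dict, label) pairs.

    The first column must hold the names; `result` is the column used as the label.
    """
    df = pd.read_csv(name)
    # Extract the features from the name in the first column
    df['last_letter'] = df.apply(lambda row: row.iloc[0][-1], axis=1)
    df['last_two_letter'] = df.apply(lambda row: row.iloc[0][-2:], axis=1)
    df['last_is_vowel'] = df.apply(lambda row: int(row.iloc[0][-1] in "aeiouy"), axis=1)
    train = df.loc[:, ['last_letter', 'last_two_letter', 'last_is_vowel']]
    train_dicts = train.to_dict(orient='records')  # one feature dict per row
    labels = df[result]
    return [(train_dict, label) for train_dict, label in zip(train_dicts, labels)]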

Our names.txt looks like this:

df = pd.read_csv("names.txt")
print(df)

| | name | gender |
|---|---|---|
| 0 | ebin | M |
| 1 | leekas | M |
| 2 | jinesh | M |
| 3 | neethu | F |
| 4 | mary | F |
| 5 | neenu | F |
| 6 | sanitha | F |
| 7 | lekha | F |

df['last_letter'] = df.apply(lambda row: row.iloc[0][-1], axis=1)
df['last_two_letter'] = df.apply(lambda row: row.iloc[0][-2:], axis=1)
df['last_is_vowel'] = df.apply(lambda row: int(row.iloc[0][-1] in "aeiouy"), axis=1)

The extracted features look like this:

print(df)

| | name | gender | last_letter | last_two_letter | last_is_vowel |
|---|---|---|---|---|---|
| 0 | ebin | M | n | in | 0 |
| 1 | leekas | M | s | as | 0 |
| 2 | jinesh | M | h | sh | 0 |
| 3 | neethu | F | u | hu | 1 |
| 4 | mary | F | y | ry | 1 |
| 5 | neenu | F | u | nu | 1 |
| 6 | sanitha | F | a | ha | 1 |
| 7 | lekha | F | a | ha | 1 |

Now we train the classifier on the data from names.txt:

train_set = get_data("names.txt")
classifier = nltk.NaiveBayesClassifier.train(train_set)

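Finally, we apply the trained model to the names in predict.txt. Since we only need the names here, we pass "name" as the label column. Each pair then holds a name’s features together with the name itself, and we print the predicted gender next to each name.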

for features, name in get_data("predict.txt", "name"):
    print(name, "==", classifier.classify(features))

sukesh == M
jithil == M
sijith == M
maria == F
soumya == F
neethu == F
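The loop above only prints predictions; it does not tell us how accurate they are. Measuring that requires names whose gender is known. The sketch below computes accuracy over such a labeled set. The `accuracy` helper, the stub classifier and the three examples are all hypothetical stand-ins. With the trained model you would pass `classifier.classify` instead of the stub. NLTK also ships `nltk.classify.accuracy(classifier, gold)` for the same job.

```python
def accuracy(classify, labeled):
    """Fraction of (features, label) pairs for which classify(features) == label."""
    if not labeled:
        raise ValueError("need at least one labeled example")
    correct = sum(1 for features, label in labeled if classify(features) == label)
    return correct / len(labeled)


# Hypothetical stand-in for classifier.classify: guess F when the name ends in a vowel.
def stub_classify(features):
    return "F" if features["last_is_vowel"] else "M"


# Hypothetical labeled examples: (feature dict, true gender)
held_out = [
    ({"last_letter": "a", "last_two_letter": "ia", "last_is_vowel": 1}, "F"),
    ({"last_letter": "h", "last_two_letter": "sh", "last_is_vowel": 0}, "M"),
    ({"last_letter": "u", "last_two_letter": "hu", "last_is_vowel": 1}, "M"),
]
print(accuracy(stub_classify, held_out))  # 0.6666666666666666 (2 of 3 correct)
```

Keep the labeled evaluation names separate from names.txt; scoring the model on its own training data would overstate its accuracy.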