The Role of Cloud Data Lake ETL in Modern Data Architecture

Does Cloud Data Lake ETL really play a crucial role in modern data architecture? If that is the question you want answered, you're in the right place.

In this short introduction, we will explore the importance of Cloud Data Lake ETL and how it fits into the overall data architecture landscape. If you are in a hurry and would rather not read through, reach out to our Cloud Service experts, who offer consulting services for data warehouse solutions. They can help you build adaptable, centralized storage systems that support data-driven decision-making.

By leveraging the power of the cloud, ETL (Extract, Transform, Load) processes can be seamlessly integrated into your data lake environment. This enables you to efficiently ingest, transform, and analyze large volumes of data from various sources.

With Cloud Data Lake ETL, you can unlock the true potential of your data and make informed business decisions.

So, let's dive in and discover the value that Cloud Data Lake ETL brings to modern data architecture.

Benefits of Cloud Data Lake ETL

What are the benefits of Cloud Data Lake ETL for you?

Cloud Data Lake ETL offers several advantages that can greatly benefit you and your organization. Firstly, it provides scalability and flexibility, allowing you to easily handle large volumes of data.

With Cloud Data Lake ETL, you can store and process data of any size, enabling you to efficiently manage and analyze massive datasets.

Additionally, it offers cost savings by eliminating the need for upfront hardware investments. By leveraging the cloud, you can avoid the expenses associated with maintaining on-premises infrastructure.

Moreover, Cloud Data Lake ETL enables faster data processing and analysis. With its distributed computing capabilities, you can parallelize your data processing tasks and significantly reduce processing times.

This empowers you to make quicker and more informed business decisions. Furthermore, Cloud Data Lake ETL facilitates data integration and collaboration. It allows you to easily ingest data from various sources and integrate it into a single, unified data lake. This promotes data sharing and collaboration across teams, enhancing overall productivity and efficiency.
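The parallel processing mentioned above follows a partition, process, and combine pattern. The sketch below illustrates that pattern on a single machine with Python's standard library. It is only an illustration: a real data lake engine distributes partitions across many machines, and the function names and the `amount` field are assumptions made for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(records, n_parts):
    """Split records into n_parts chunks by taking every n-th record."""
    return [records[i::n_parts] for i in range(n_parts)]


def total_amount(chunk):
    """Per-partition work: sum the (hypothetical) 'amount' field of one chunk."""
    return sum(record["amount"] for record in chunk)


def parallel_total(records, n_parts=4):
    """Process each partition in its own worker, then combine the partial results."""
    chunks = partition(records, n_parts)
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        partials = list(pool.map(total_amount, chunks))
    return sum(partials)
```

Because each partition is processed independently, the same structure carries over to engines that spread partitions across a cluster; only the executor changes.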

In short, Cloud Data Lake ETL offers scalability, cost savings, faster processing, and improved collaboration, making it a valuable tool for your data management and analysis needs.

Key Components of Cloud Data Lake ETL

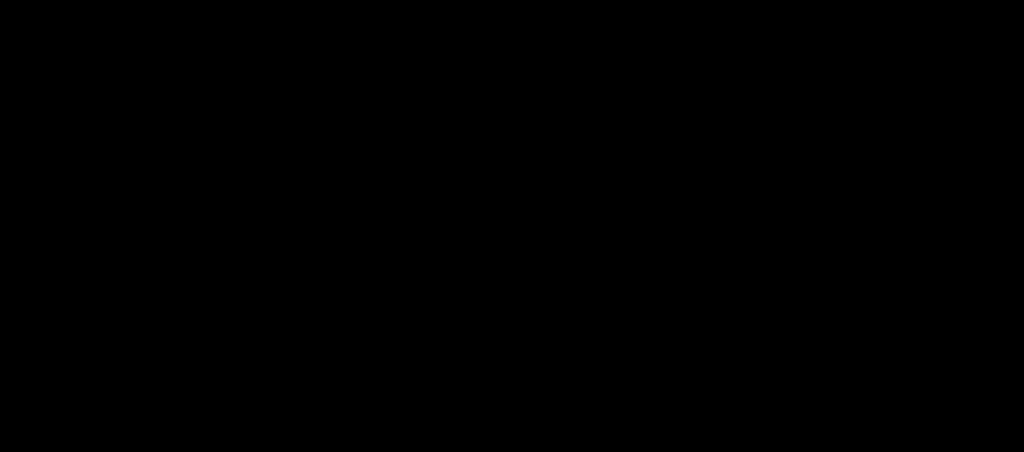

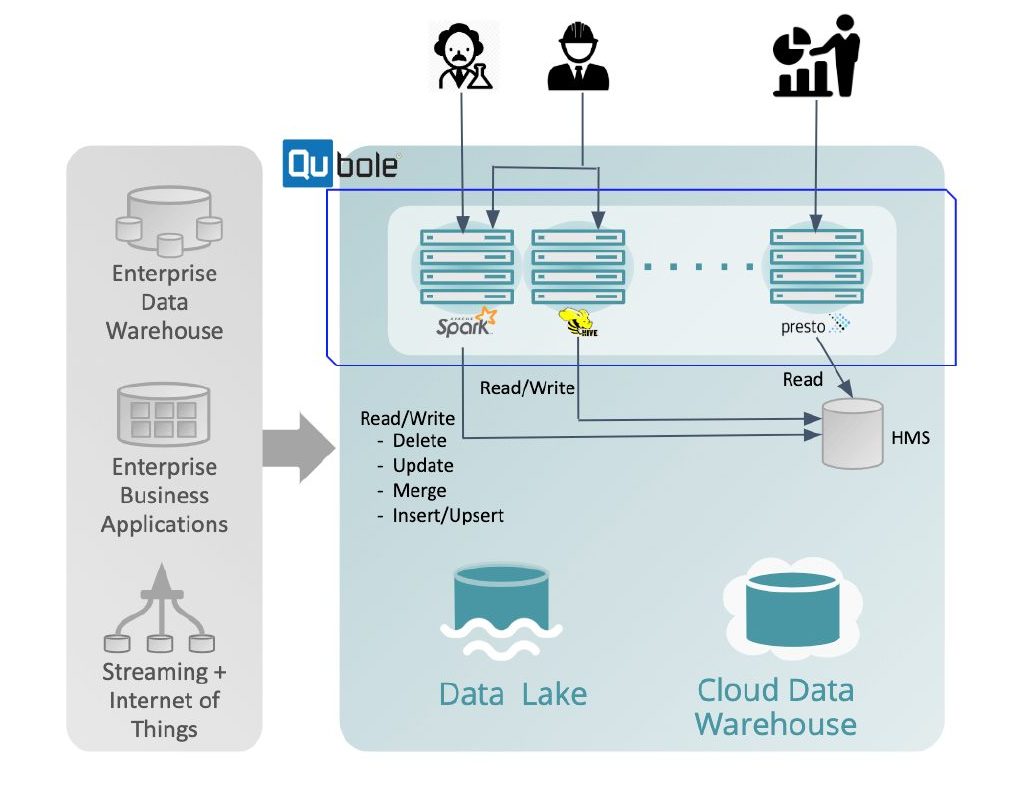

To effectively implement Cloud Data Lake ETL in your modern data architecture, it is essential to understand the key components involved. The first key component is the data source. This refers to the location where your data resides, whether it is a relational database, a file system, or an external API.

The second component is the data ingestion process. This involves extracting data from the source, transforming it into a suitable format, and loading it into the data lake.

The third component is the data catalog. A data catalog provides metadata information about the data stored in the data lake, such as its structure, schema, and lineage. It allows users to easily discover and access the data they need.
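To make the idea of a data catalog concrete, here is a minimal in-memory sketch. It stores the kind of metadata described above (location, schema, and lineage) and supports simple discovery. The class names, fields, and dataset names are hypothetical; managed catalog services expose far richer interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    location: str   # where the dataset lives in the lake (illustrative path)
    schema: dict    # column name -> type name
    upstream: list = field(default_factory=list)  # lineage: names of source datasets


class DataCatalog:
    def __init__(self):
        self._entries = {}

    def register(self, entry):
        """Add or overwrite the metadata for one dataset."""
        self._entries[entry.name] = entry

    def find_by_column(self, column):
        """Discovery: names of datasets whose schema contains the column."""
        return sorted(
            name for name, entry in self._entries.items() if column in entry.schema
        )

    def lineage(self, name):
        """All upstream datasets of `name`, nearest first."""
        seen = []
        queue = list(self._entries[name].upstream)
        while queue:
            current = queue.pop(0)
            if current in seen:
                continue
            seen.append(current)
            if current in self._entries:
                queue.extend(self._entries[current].upstream)
        return seen
```

A user looking for order data could call `find_by_column("order_id")` to discover candidate datasets, then `lineage(...)` to see where a dataset came from.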

The fourth component is the data processing engine. This engine is responsible for executing the data transformations and analytics on the data lake. It provides capabilities like querying, aggregating, and joining data to derive insights and generate valuable reports.

Lastly, the data governance and security component ensures that the data in the data lake is protected, compliant with regulations, and accessible only to authorized users. By understanding and implementing these key components, you can successfully leverage the power of Cloud Data Lake ETL in your modern data architecture.

ETL Workflow in a Cloud Data Lake

The ETL workflow in a cloud data lake is something you run regularly to transform data and load it into your modern data architecture. It consists of four key steps that move data from its raw form to its transformed and loaded state:

- Extraction: This is the process of extracting data from various sources such as databases, applications, or APIs. It involves gathering the necessary data and bringing it into the cloud data lake for further processing.

- Transformation: Once the data is extracted, it needs to be transformed into a format that is suitable for analysis and storage. This step involves cleaning the data, structuring it, and applying any necessary business rules or calculations.

- Loading: After the data has been transformed, it is loaded into the appropriate storage location within the cloud data lake. This step ensures that the data is organized and accessible for analysis and reporting.

- Orchestration: The ETL workflow is orchestrated to ensure that the steps are executed in the correct order and at the right time. This involves scheduling the workflow, monitoring its progress, and handling any errors or exceptions that may occur.
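The four steps above can be sketched in a few lines of Python using only the standard library. This is a simplified illustration, not a production pipeline: the source is CSV text, the "lake" is any writable text stream (in practice it would be an object-store upload), and the column names and the `is_large` rule are hypothetical.

```python
import csv
import io
import json
import logging

logger = logging.getLogger("etl_sketch")


def extract(csv_text):
    """Extraction: read raw rows from a source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transformation: clean values, drop invalid rows, apply a business rule."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            continue  # skip rows with a missing or non-numeric amount
        cleaned.append({
            "customer": (row.get("customer") or "").strip().lower(),
            "amount": amount,
            "is_large": amount >= 100.0,  # hypothetical business rule
        })
    return cleaned


def load(rows, sink):
    """Loading: write rows as JSON Lines to a writable text stream."""
    for row in rows:
        sink.write(json.dumps(row) + "\n")
    return len(rows)


def run_pipeline(csv_text, sink):
    """Orchestration: run the steps in order, log progress, surface failures."""
    try:
        raw = extract(csv_text)
        logger.info("extracted %d rows", len(raw))
        clean = transform(raw)
        logger.info("kept %d of %d rows", len(clean), len(raw))
        written = load(clean, sink)
        logger.info("loaded %d rows", written)
        return written
    except Exception:
        logger.exception("pipeline failed")
        raise
```

In a real deployment, `run_pipeline` would typically be triggered by a scheduler or workflow tool rather than called by hand, which is where the scheduling and monitoring parts of orchestration come in.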

Data Governance and Security in Cloud Data Lake ETL

To ensure the integrity and confidentiality of data in your cloud data lake ETL process, it is crucial to establish robust data governance and security measures. Data governance involves defining and implementing policies, processes, and procedures to manage and protect data throughout its lifecycle. With the increasing volume and complexity of data in cloud data lakes, effective data governance becomes even more critical.

One of the key aspects of data governance in cloud data lake ETL is access control. You need to ensure that only authorized individuals have access to the data. This can be achieved by implementing strong authentication mechanisms, such as multi-factor authentication, and role-based access control. Additionally, you should regularly review and audit user access to detect any unauthorized activities.
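As a rough illustration of role-based access control with an audit trail, consider the sketch below. The roles, permissions, and class name are assumptions made for this example; in practice you would rely on your cloud provider's identity and access management service rather than hand-rolled checks.

```python
# Hypothetical role definitions: role name -> set of permitted actions.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "grant"},
}


class AccessController:
    def __init__(self, role_permissions):
        self._role_permissions = role_permissions
        self._user_roles = {}
        self.audit_log = []  # every decision is recorded for later review

    def assign(self, user, role):
        """Give a user a role; unknown roles are rejected."""
        if role not in self._role_permissions:
            raise ValueError(f"unknown role: {role}")
        self._user_roles.setdefault(user, set()).add(role)

    def is_allowed(self, user, action, dataset):
        """Allow the action only if one of the user's roles permits it."""
        roles = self._user_roles.get(user, set())
        allowed = any(action in self._role_permissions[role] for role in roles)
        self.audit_log.append((user, action, dataset, allowed))
        return allowed
```

Users without any assigned role are denied by default, and the `audit_log` list gives reviewers a record of both granted and denied requests.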

Data encryption is another essential security measure. By encrypting data at rest and in transit, you can protect it from unauthorized access. Encryption should be applied not only to sensitive data but also to metadata and log files.

To detect and prevent data breaches and unauthorized access attempts, you should implement robust monitoring and logging mechanisms. This includes monitoring user activities, system events, and data access patterns. By analyzing logs and using advanced analytics, you can identify any suspicious activities and take appropriate actions.
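One simple form of log analysis is counting denied access attempts per user and flagging anyone who crosses a threshold. The sketch below assumes each log event is a dictionary with `user` and `allowed` keys; that event format and the default threshold are illustrative choices, not a standard.

```python
from collections import Counter


def flag_suspicious_users(events, max_denied=3):
    """Return users with at least `max_denied` denied access attempts."""
    denied = Counter(event["user"] for event in events if not event["allowed"])
    return sorted(user for user, count in denied.items() if count >= max_denied)
```

Real monitoring systems look at many more signals, such as time of day, data volume, and unusual access patterns, but the principle of aggregating events and alerting on anomalies is the same.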

Regular backups and disaster recovery plans are crucial for data integrity and availability. By backing up your data and having a well-defined recovery plan, you can ensure that your data remains intact and accessible in the event of any unforeseen issues or disasters.

Integration of Cloud Data Lake ETL With Analytics Tools

Integrating cloud data lake ETL with analytics tools enhances data analysis capabilities. By combining the power of cloud data lakes with advanced analytics tools, organizations can unlock valuable insights from their data and make informed decisions. Here are two key benefits of integrating cloud data lake ETL with analytics tools:

- Simplified data processing: With the integration of ETL (Extract, Transform, Load) processes and analytics tools, organizations can streamline their data processing workflows. This allows for efficient data extraction, transformation, and loading into the analytics tools, enabling faster and more accurate analysis.

- Enhanced data visualization and reporting: Analytics tools provide powerful visualization and reporting capabilities that can help present data in a meaningful and easily understandable way. By integrating cloud data lake ETL with these tools, organizations can create interactive dashboards, charts, and reports that enable users to explore and comprehend data effortlessly.
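As a small illustration of handing ETL output to an analytics layer, the sketch below loads transformed rows into an in-memory SQLite database and runs an aggregate query of the kind a dashboard might issue. SQLite stands in for a real analytics tool here, and the table and column names are hypothetical.

```python
import sqlite3


def revenue_by_region(rows):
    """Load (region, amount) rows and return total revenue per region."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
        conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
        cursor = conn.execute(
            "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
        )
        return cursor.fetchall()
    finally:
        conn.close()
```

The result is a list of `(region, total)` tuples that a reporting or visualization tool could chart directly.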

Best Practices for Implementing Cloud Data Lake ETL

To achieve optimal results when implementing cloud data lake ETL, it is essential to follow best practices that maximize efficiency and accuracy in your data processing workflows. These best practices ensure that your ETL processes are streamlined, reliable, and scalable, allowing you to extract, transform, and load your data seamlessly into your cloud data lake.

First and foremost, you should design your ETL pipelines with modularity and reusability in mind. By breaking down your workflows into smaller, independent components, you can easily maintain and update them as your data and business requirements evolve.
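One way to get this modularity is to write each transformation as a small function and compose them into a pipeline. The sketch below shows the idea; the step functions and the `name` field are made up for illustration.

```python
from functools import reduce


def compose(*steps):
    """Build a pipeline that applies each step to the output of the previous one."""
    def pipeline(rows):
        return reduce(lambda data, step: step(data), steps, rows)
    return pipeline


def drop_empty_names(rows):
    """Reusable step: discard rows with a missing or empty 'name'."""
    return [row for row in rows if row.get("name")]


def normalize_names(rows):
    """Reusable step: trim whitespace and title-case the 'name' field."""
    return [{**row, "name": row["name"].strip().title()} for row in rows]


clean_customers = compose(drop_empty_names, normalize_names)
```

Each step can be tested on its own and reused in other pipelines, and changing a requirement usually means swapping or adding one step rather than rewriting the whole workflow.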

Additionally, it is crucial to implement data quality checks at every stage of the ETL process. This includes validating data formats, performing integrity checks, and ensuring data consistency. By doing so, you can identify and resolve any issues early on, reducing the risk of inaccurate or incomplete data being loaded into your data lake.
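The three kinds of checks mentioned above (format validation, integrity, and consistency) might look like the following sketch. The column names and rules are assumptions chosen for this example; your own checks would reflect your data and business rules.

```python
from datetime import date


def validate_rows(rows, required=("order_id", "order_date", "amount")):
    """Split rows into valid rows and (row index, problems) error records."""
    valid, errors = [], []
    seen_ids = set()
    for index, row in enumerate(rows):
        problems = []
        missing = [col for col in required if row.get(col) in (None, "")]
        if missing:
            problems.append("missing: " + ", ".join(missing))
        # Format check: order_date must be an ISO date such as 2024-01-15.
        if row.get("order_date"):
            try:
                date.fromisoformat(row["order_date"])
            except (TypeError, ValueError):
                problems.append("order_date is not in ISO format")
        # Integrity check: order_id must be unique within the batch.
        order_id = row.get("order_id")
        if order_id not in (None, ""):
            if order_id in seen_ids:
                problems.append("duplicate order_id")
            seen_ids.add(order_id)
        # Consistency check: amount must be numeric and non-negative.
        amount = row.get("amount")
        if amount not in (None, ""):
            try:
                if float(amount) < 0:
                    problems.append("negative amount")
            except (TypeError, ValueError):
                problems.append("amount is not numeric")
        if problems:
            errors.append((index, problems))
        else:
            valid.append(row)
    return valid, errors
```

Running a function like this before the load step lets you quarantine bad rows and report them, instead of discovering the problems later in a dashboard.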

Furthermore, implementing data lineage and metadata management practices is essential for data governance and compliance. By tracking the origin and transformations applied to your data, you can ensure data traceability and maintain a clear understanding of the data's context and history.
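A lightweight way to capture lineage is to record every transformation as it is applied. The sketch below wraps each step and stores its name, row counts, and a timestamp. The class and the record fields are illustrative; dedicated lineage and metadata tools capture much more detail.

```python
from datetime import datetime, timezone


class LineageTracker:
    def __init__(self, source):
        self.source = source  # where the data originated
        self.steps = []       # ordered record of applied transformations

    def apply(self, step, rows):
        """Run one transformation and record what it did."""
        result = step(rows)
        self.steps.append({
            "step": step.__name__,
            "rows_in": len(rows),
            "rows_out": len(result),
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return result


def drop_negative(rows):
    """Example transformation: keep only non-negative values."""
    return [value for value in rows if value >= 0]
```

After a run, `tracker.steps` answers questions such as which transformations touched the data and where rows were dropped, which is the traceability that governance and compliance reviews ask for.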

Lastly, leveraging automation tools and technologies can greatly enhance the efficiency of your ETL processes. Automating repetitive tasks, such as data ingestion, transformation, and scheduling, not only saves time but also reduces the likelihood of human errors.

Conclusion

In conclusion, cloud data lake ETL plays a crucial role in modern data architecture by letting businesses efficiently extract, transform, and load data into their cloud data lakes. With benefits that include scalability, cost-effectiveness, and flexibility, it enables organizations to integrate various data sources while maintaining data governance and security.

By integrating cloud data lake ETL with analytics tools, businesses can derive valuable insights and make informed decisions, ultimately driving growth and success. Want to know more about seamless integration of Cloud Data Lake ETL for efficient and scalable data processing? Get in touch.