Choosing the Best Database for Optimal Performance – Part 1

Choosing a database is a simple thing when we are looking for a product that does not need large scaling. It could be an internal management system, a hyperlocal ecommerce model in a few kilometer areas, or an application with high per-customer returns. But, when planning to serve it across borders, we may need to think about how efficiently we can deliver our applications with the best user experience. Let’s discuss some prominent databases around us in this first part of the blog by calling it a ‘super introduction.’

So, this discussion is divided into two sections:

- Different Databases around us

- Things to know before we choose one

Before that, let’s put a question for the enthusiasts here:

Assume you are a developer at LinkedIn, (for the sake of understanding) and you are assigned to share a Tim Cook post with all his 3rd and 4th-degree connections.

What will be your “database”, “data structure” and “query” to find those users? - This is the problem we are trying to tackle here.

How It Is About…

As developers, what is our core responsibility?

To manage the soft copy of data, right? Does someone have any other opinion (I respect that as well)? Automating your business, creating tech solutions for your problem, etc. provides a potential growth of handling 50 users per month using Excel sheets by you to 500K per day or more with your staff. So, basically, we automate the point of entry of raw data with engaging UI & UX and forms, store them in the database, calculate what we want, and present what we need. What do developers manage here? It’s the raw form of data. You may recall a person's photo or name by looking at the Uber driver's location from where the customer stands. Or even more complex, we can see the street view and go to the business just by looking at Google Maps.

Understanding Data Types

When the world is running, our big three headaches start with v's. They are volume, velocity, and variety of data. So to control them, we don’t have a magic spell like Harry Potter uses.

So we need engineering and better storage for easy data retrieval using expert cloud services. The primary step will be classifying and storing different data types into various formats, where we can perform operations on them. When we need to operate it efficiently, we need them to be in an optimal structure where we can use it, like just storing a yes or no, for the question, “Does this user have admin access?”, or a date with which I can bring, “Get me all users who have a purchase this month.”

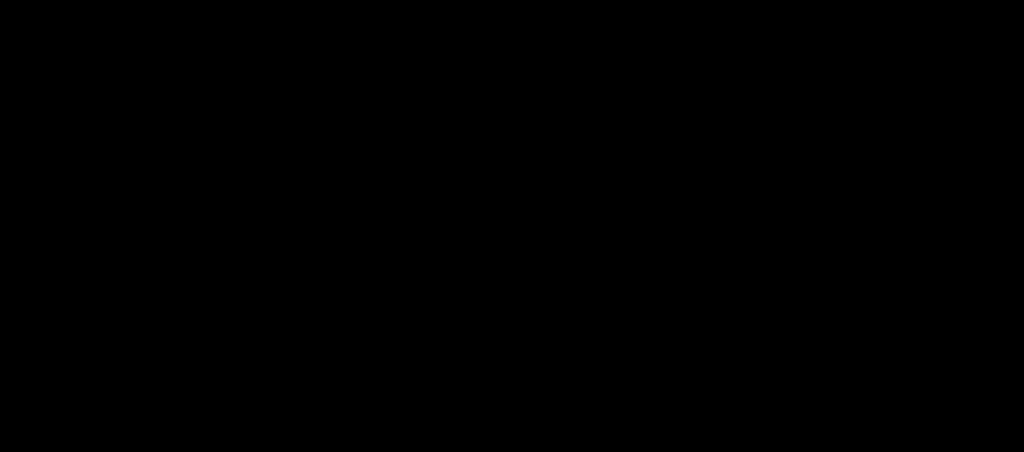

Just go through these terms, in case anything is confusing, just Google it as “Explain Data Type: UUID”. Yet these are just some of the underrated data types. You can explore more using ChatGPT, Copilot, or Claude.

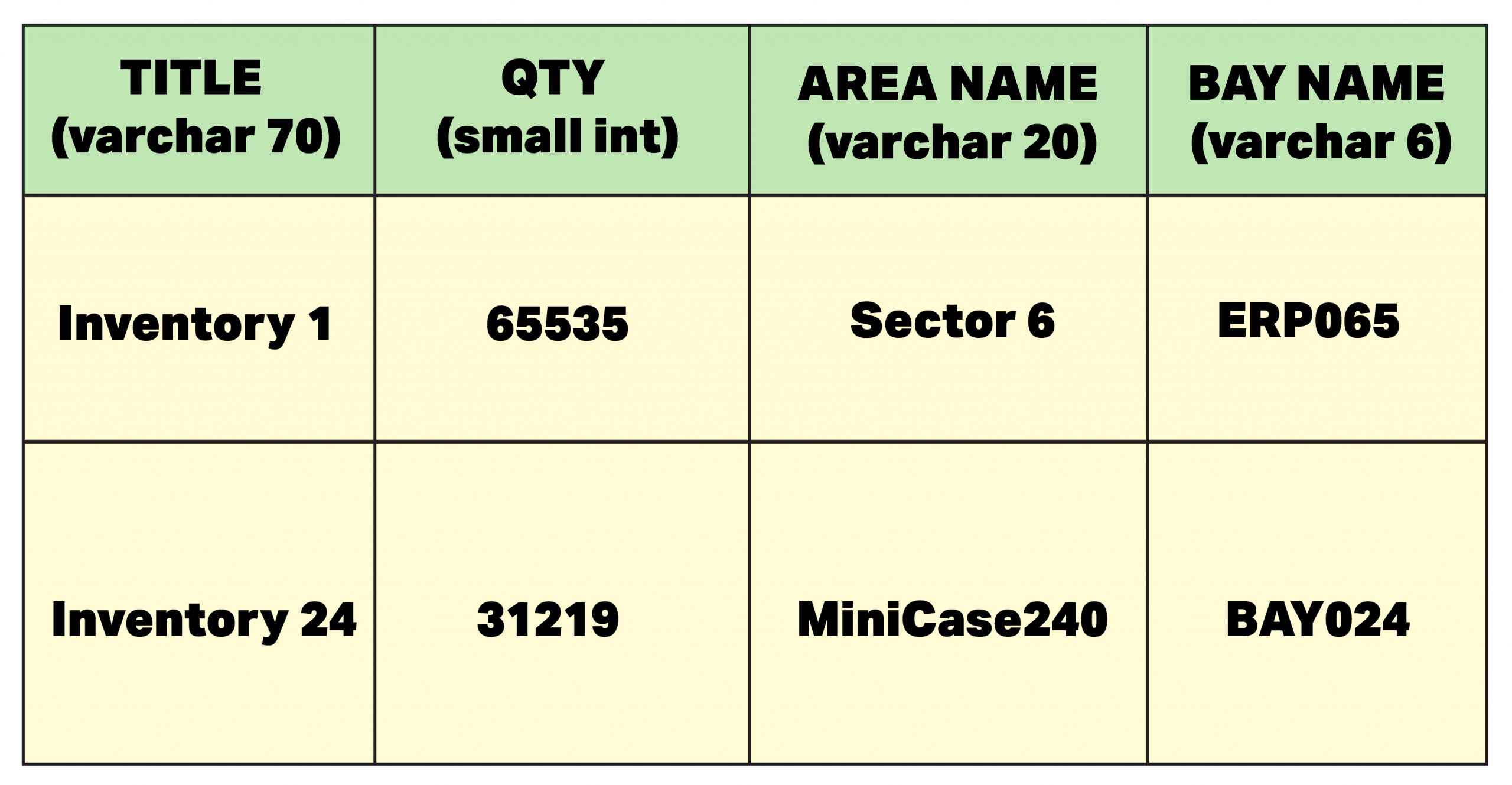

When planning to store data as tables, such as an Excel sheet, we call it a relational database.

Everyone might be aware of the term “Relational Database”. For those unfamiliar, here is a simplified definition:

- This is an application, something like Photoshop or Google Chrome, but its primary purpose is to get connected to other programs, to store, modify, and retrieve data efficiently.

You can see a sample table above and a CSV on the right. We can imagine the data we insert into the database to be stored in such a manner.

First Imagination

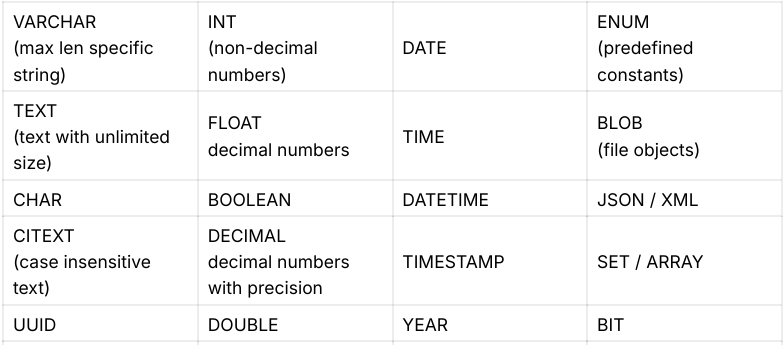

So, for those who already have an idea, have you ever wondered, how these datasets have been stored in db? This is beyond our scope, however, imagine on one line for the left dataset, we have a table like this:

Net it will take around 100 chars of space for each row. This leads to storing data like:

The first row starts at, say, 2000, then the next record will be at 2100, and the next at 2200.

Using this structure, we can assure, at any row, the area name starts at the location will be = (row starting + 74) and the ending location will be (row starting + 94)

This is not how it works, but it is a great place to start for an in-depth and simple breakdown.

So, regardless of whether data is there or not, the space is kept reserved to access data with some simple calculations; if we know the starting address of the table, and size of any row, and the delta of space for each column. From the row:

Now you can think of SELECT * FROM table WHERE areaname="Warehouse"; to be executed over your custom script.

An Ultimate Solution for All Your Problems?

Regarding most common databases, relational databases are the most popular and widely used due to their community support and available tutorials. This is the most common one beginners prefer. Most likely you will hear MySQL, PostgreSQL, MariaDB, Microsoft SQL Server, and Oracle Database.

While starting to implement our concept, the first thing to analyze will be: what data do we want to operate? followed by entry points, and display points of these data.

Problem 1 - Implementation of Google Map Live Tracking

Being a bit odd, let’s take a complex example of Google Maps’s Live Traffic information. The ultimate agenda is to display or (share as API) the live information of a road user intended to travel. The planned output shows the road as Blue, Yellow, or Red, from Location A to Location B. How are these data collected?

They know there is no one to monitor all these details, summarize, and upload. They need to determine the number of vehicles present and want to use the presence of mobile phones rather than vehicles. They could successfully send the location of each mobile phone to one of their servers. From there, they run another service to group information mobiles with similar movement behavior that can be clustered into single vehicles, say, 50 mobiles can be classified as a bus, 5-7 as a van or car, 1, 2 or 3 mobiles can be a bike or rickshaw (maybe a classifier ML model made with seasoned AI development services will help to identify that). This service can act as the above-discussed “summarizer”. This can be stored in a temporary but highly available data storage. A provisioner can manage the publishing of sudden traffic surges to all listening maps that are already in motion.

Do we need a highly persistent, low-write-capable database here? Since we are only storing them temporarily, but with high write volume, missing some data will not affect it much. This can be leveraged for performance or cost optimization. What if we had a key value in the memory application that could be stored in RAM, maintaining excellent performance? Someone struck by Redis at this point, another with Memcached, or another with something else.

Problem 2 - Stateful Services like Uber, Swiggy

A similar situation can be Uber or Swiggy tracking the location of the driver and serving this information to nearby customers. Here gig workers willingly share their location on a public platform so that customers can track their order almost in real-time. We don’t want to store where Driver X was in 2021, Jan 25th at 3:00 PM. We need to track this only live, the very next second or the next hour. The validity of data is expired or becomes useless. So, is the above explanation valid here?

Problem 3 - Timeline Creation in X (formerly “Twitter”)

Thinking of an entirely different problem, in your X account, you may be following 1000 people, or someone may be following 8K “active” people. How will you build your relational database query to construct your timeline?

Assume relational tables like this:

--- user table ---

TABLE USER (

id INTEGER AUTOINCREMENT PRIMARY KEY,

username VARCHAR(100),

);

--- followers table ---

TABLE FOLLOWERS (

user FOREIGN KEY(USER),

friend FOREIGN KEY(USER),

)

--- tweet table ---

TABLE TWEET (

author FOREIGN KEY(USER),

content TEXT,

posted_at DATETIME,

)

Just “assume” some of the stats of X, to have a read of 300K TPS (Tweets per second) and a write of 6K TPS. From this, it is clear that it will not be an intelligent design to perform a 300K query to construct a timeline each time the user asks for his timeline.

Let me try a basic query.

-- assuming:current_user_id as logged-in user's id

SELECT t.id, t.author, u.username, t.content, t.posted_at

FROM TWEET t

JOIN USER u ON t.author = u.id

WHERE t.author IN (

SELECT friend

FROM FOLLOWERS

WHERE user = :current_user_id

UNION

SELECT:current_user_id

)

ORDER BY t.posted_at DESC

LIMIT 20 OFFSET 0;

Let me try a different approach,

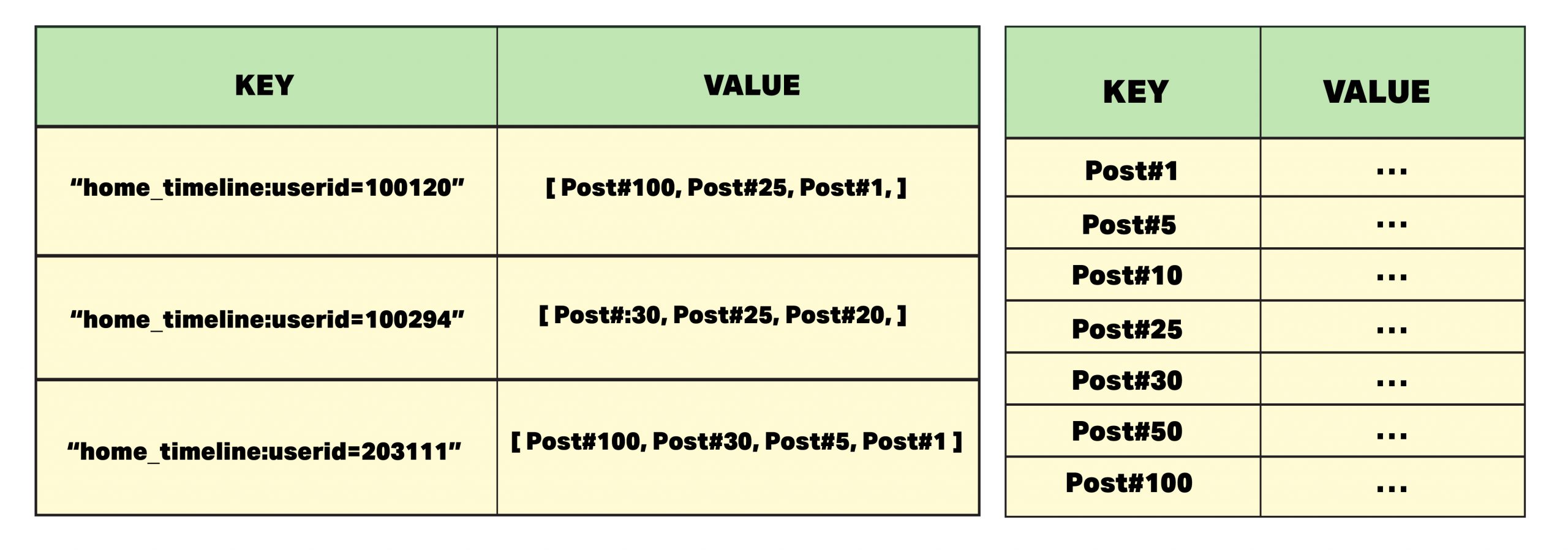

What if I can construct the user timeline at the time of post creation by a friend?

The same mechanism can play a vital role more efficiently than traditional thought of relational database service. Fetch the data against a key and perform a simple return - that’s it. This scenario is safe with some document databases or key-value databases like Apache DynamoDB, Hadoop-HBase, Redis, or MongoDB. The main power of key-value and document databases is its ease of horizontal scaling, as dependencies to other rows are more logical than enforced ones. So, commonly they will have a write-intrinsic nature.

Problem 4 - LinkedIn’s Second & Third Connections

Coming back to the first question on the top,

Assume you are a developer at LinkedIn, and you are assigned to share a user’s post to all his 3rd and 4th-degree connections.

What if we need to share the post with the first, second, and third connections? What if our marketing team asked to share it with one more layer of connections to achieve the financial target?

You may need to join tables recursively with a drastic performance variation.

Let me think of this from a different perspective:

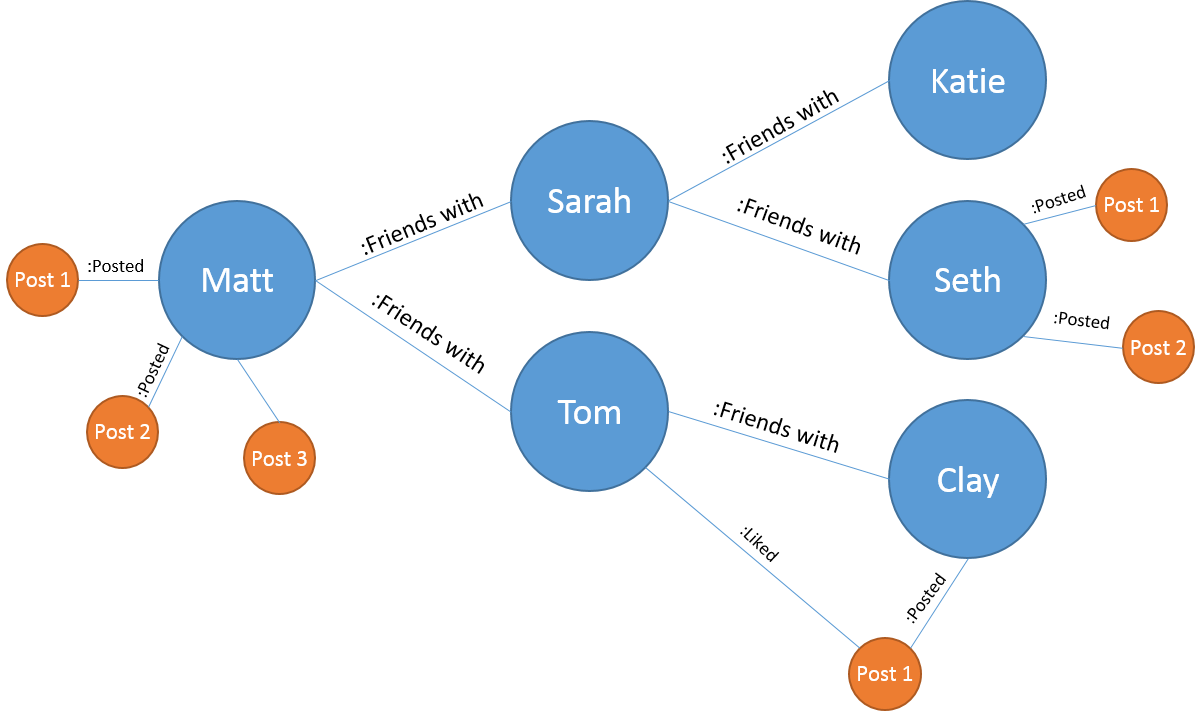

Source: Medium

What if we can store this information in such a connected node with persons as nodes and create connections between nodes (edges) when someone connects? As you can imagine, we can easily find the 4 degrees with less computation.

MATCH User( $current_user_id ):FRIEND_OF[*3..4];

We found another treasure now, which has the potential of mapping relations. Many databases like Neo4J, Amazon Neptune, and many others provide an efficient way to facilitate graph-based relations.

Problem 5 - Flipkart’s Search Engines and Quick Analytics

Flipkart had several fascinating features a few years ago, including hardcore research on UX. Even switching the color of the “Buy Now” button to bright orange and the “Add to Cart” button to white contributed to a huge jump in ROI.

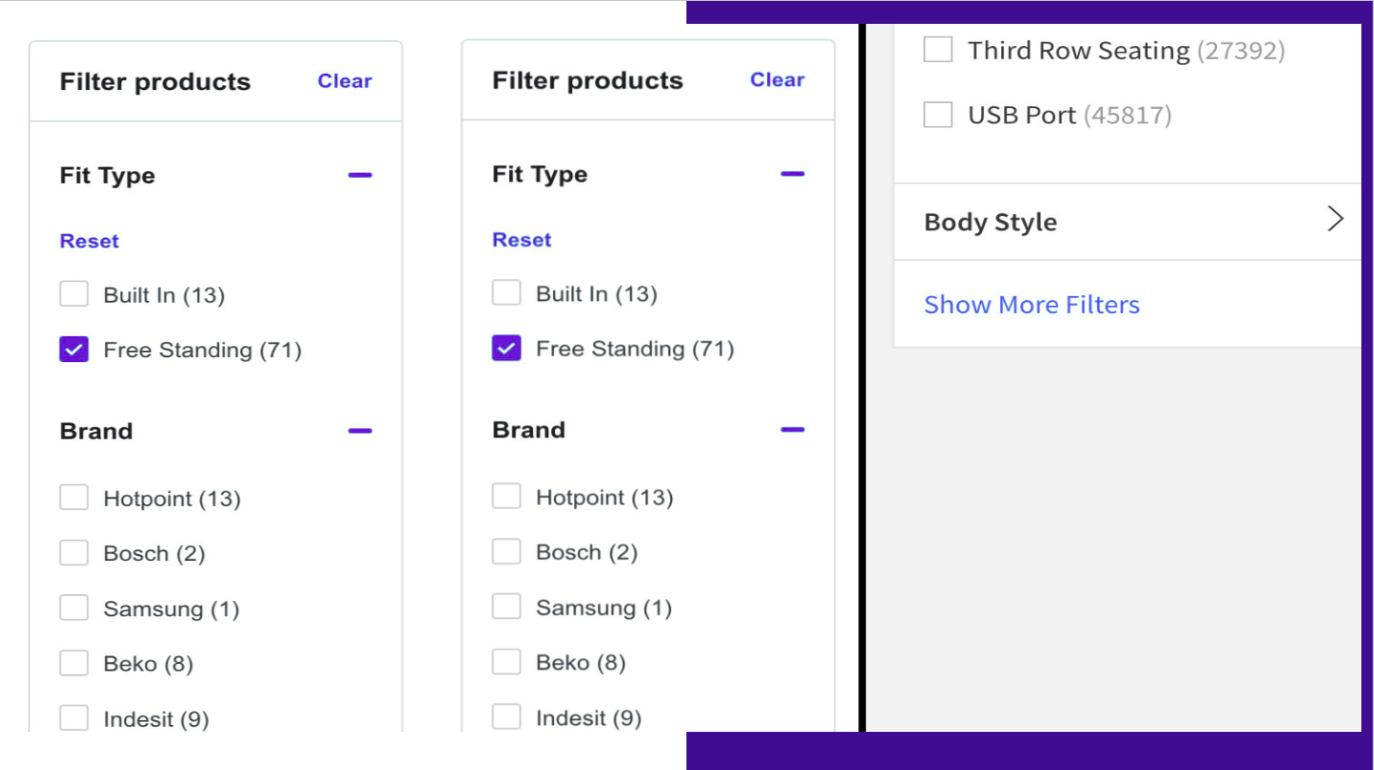

Coming back to our topic, Flipkart was providing the sample product count with the current selection over the dynamic filter. Users may play with this a dozen times before the actual submission of the “Apply Filter”.

Source: WebArchive

Seems interesting? The relational databases can be much more expensive and may have O(n) complexity. For platforms like Flipkart which focuses on high on-screen presence with 100x more read than write-to-catalog data, an in-memory storage can provide a huge heads up than In-Disk persistence storages. Solutions like “ElasticSearch” or “Apache Solr” can align with the idea to provide more powerful responses.

We may have time series data entries like Prometheus DB or InfluxDB - prominent ones used to store log-based information. Some others plan to execute over a distributed environment, such as Kafka (not a DB, but still stores data as logs), or HBase (Document DB).

Conclusion

Do not jump to the conclusion that RDBMS is a worthless solution. It is powerful for its use cases and can power up the whole world. Still, the right tool at the right time generates better ROIs, and saves time, and costs. Unfortunately, there is no ultimate solution to all our problems. But we can get one tailored to our specific needs with the help of a capable custom software development company. So, now that we have a solid preliminary idea around the topic, stay tuned for more context in the next part of this blog.