Choosing the Best Database for Optimal Performance – Part 2

Choosing the best database requires researching core strategies and behaviors that contribute to maximizing its performance. You will also need to journey deeper into sophisticated techniques, tools, and best practices to enhance the efficiency and scalability of your database systems.

I am following up on where we left off in our 'super introduction'. There were two major sections in the previous part of the blog:

- Different Databases around us, and

- Things to know before we choose one

So, this final section will go over 'some scribbles on scalability and performance'.

Let us look at what will help us reach peak database performance, whether managing large datasets, fine-tuning queries, or incorporating AI-driven insights.

Let's begin.

A deep understanding of the system is important. It is challenging to stay focused on the end product when using agile, yet we know the core problems.

Here are some thoughts from a single-point perspective, What are the demands for choosing the 'right' database? You might know all the theoretical solutions by now, like ACID Compliance, Normalization, Denormalization, and others.

Wait, Denormalization? Yes, you read it right. Sometimes we need to denormalize standards for better performance. There was a common grandpa tale about the Instagram database. Whenever Justin Beiber posts something to Instagram, the Instagram MYSQL Server goes down. On exploring executed queries, the developers found that the count of likes for the post is getting executed at a huge rate. They have to forget the normalization classes and store the number of likes in an additional column. The error that can happen on additional write hits is so inexpensive when compared to the expense of calculating the count of likes at each read.

Anyway, for more theoretical chapters, you can Google or ask ChatGPT for some “Key Factors to Consider When Choosing a Database” as they sound more theoretical.

So, let’s shift our focus to some practical scenarios.

Understanding of Ongoing Technologies

Knowledge about different services is the very first thing to choose to understand what becomes the best fit or at least what should be searched for. A clear understanding of the requirement is the next thing to be focused on to bring the best ROI for your cloud-based solutions.

Nature of Storage and Querying

What comes to your mind when you hear a problem statement? Does that sound like an aggregator that counts the number of devices at a time, or more like related datasets, like keeping a ledger of cash in a wallet? Or something more complex as we previously discussed, to retain a state like online status and location of Uber drivers?

These examples shape the data across our mobile applications or IoT devices to servers and end as analytics or reports. We have to define the idea of input structure, output structure, storage information, its validity, and the responsible person for an entity.

Required Persistency

It is good to have persistence of data. But what if we can compromise it for performance or cost cutting when needed? It is even better. Consider a case of sharing usage logs from IoT solutions. There is no need to store them as a whole, instead, we can aggregate them and store only what we need for the time being or permanently.

To crack our own custom software solution, we have In-Memory, Persistent, and Distributed storage. Previously, we have looked at some entry-level case studies and the need to choose the right databases. This can empower you to understand and shape your inputs, outputs, and the structure needed in storage.

Supporting Features and Required Performance

- Many databases provide solutions for handling geospatial data, document contents, text search, and plugin facilities. Some are kept to be light.

- Some focus on high performance even on millions of data while others start from billions of records. We classify them with respect to their Storage Capacity.

- Heavy read requires a read-intrinsic database, while others may develop themselves for heavy write loads. Their throughput, and latency matter in those cases.

Scaling

Scaling can be done in different ways.

Logical partitions

Logical partitions are data partitioned based on factors like country or time. For instance, the orders of all users from a specific country will be logically partitioned into a specific table and handled accordingly. Another example is storing data for each year in one table.

We know some of the data like orders or logs get accumulated over time. After a time, these may not be frequently used. It is a rare event and it is okay to face a delay in accessing old data. So, after a time, these can be stored in large storage as we may need limited operations there. Companies like Flipkart store the latest order data in highly available databases such as MySQL (say, the orders of the past year) and archive them to databases like Apache Cassandra. Cassandra is excellent for write operations but not so fast for read operations. Still, It is okay for Flipkart to compromise in performance for large-volume storage and better performance of recent data by clearing earlier ones.

Vertical partitioning

Vertical partitioning is when the table is split into columns with different columns stored on various tables. For example, consider an event booking platform, which has continuously been developed for the last two decades, and may prune to accumulate a large number of columns in the “event” table. This itself reflects in the performance. We have to separate our columns into multiple tables in such cases.

Data Replication

When we need to serve more requests than a single database system can handle, we may have to copy the data into multiple databases, sharing the same structure, usually controlled at the application level.

Horizontal Partitioning

When we feel the performance decreases due to the volume of rows, We may have to partition the data into multiple tables or shards or to other databases, sharing the same copy of data, and syncing across. When we split them into multiple databases, which follow the same schema, this is known as Horizontal Partitioning. This is also usually load-balanced and controlled by a master DB. Non-relational data can be easily scaled horizontally.

ReadOnly Replica

When a column in a row needs to get READ, the database usually places a write-lock on the column to prevent updating that cell by others. Similarly to perform a WRITE, the database places a read-write-lock to prevent any action on this for other requests. This can raise too many deadlock situations, especially when we have the usual Master Slave Replicas.

When our application has few writes and heavy read spikes, we can usually create read-only copies (replicas) of a master database. The write will be only to the master database and sync will be done underground. This keeps the atomicity of transactions with very few write-based locks, allowing more reads with fewer deadlock-raising situations.

Caching

Databases like PostgreSQL give the feature of caching responses which is beneficial for repeated query calls. The internal caching mechanism properly balances the cache eviction as well as optimal CPU usage.

Database Indexing

Indexing is the master of double-edged swords in the database world. When used correctly, it significantly speeds up queries, but improper use can lead to inefficiencies. Indexing creates a copy of selected columns and points to the corresponding rows, allowing faster lookups. Without indexing, databases perform linear searches with O(n) complexity. With indexing (often using a B+ tree), searches are reduced to O(log n), meaning for 10,000 rows, the search depth is only 4.

But everything comes with its drawbacks. Indexing consumes additional storage and needs to reindex every update. Indexing will not work on non-exact searches like searching with %LIKE%, or when searching or filtering with other combinations. Assume we have an indexed username field for the user's table; this will work only for the querySELECT * FROM users where username="alice149";

A query like SELECT * FROM users WHERE username="alice149" AND is_active=true;

or SELECT * FROM users WHERE username LIKE "alice%"

will never utilize this index. To get the first one indexed, you have to create something like CREATE INDEX idx_username_is_active ON users(username, is_active);

Creating blindfold indexes can lead to unnecessary overhead, as they may rarely be used but still require maintenance. Proper indexing should be done after profiling queries that frequently hit the database over a period of time.

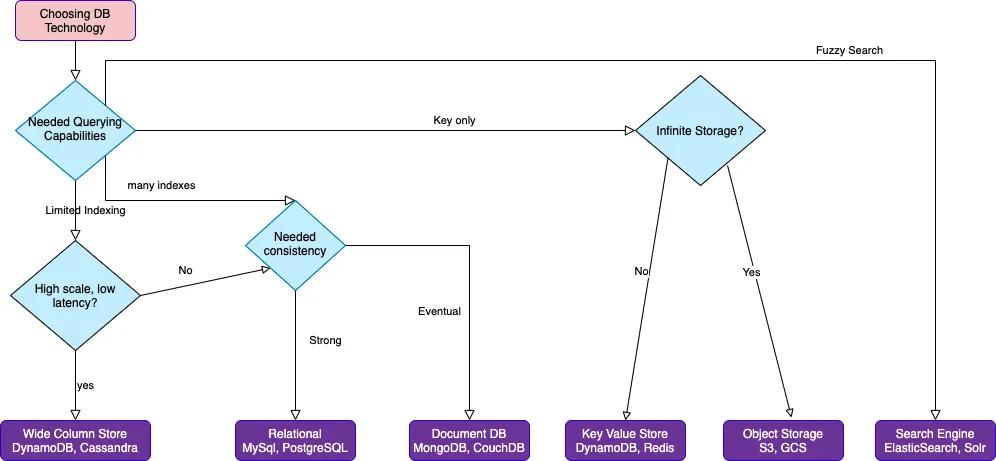

The following flowchart covers a brief about how you can choose the category of the database:

Source: Medium

A Quick Analysis of Performance of Different Operators

1. IN vs EXISTS

Complexity: IN can be inefficient when used with subqueries, especially if the subquery returns a large dataset.

Alternative: Use EXISTS for better performance, especially when we just want to check the presence.

Example:

-- Using IN

SELECT username FROM users WHERE user_id IN (SELECT user_id FROM orders WHERE order_date > '2024-01-01');

-- Using EXISTS (better performance)

SELECT username FROM users u WHERE EXISTS (

SELECT 1 FROM orders o WHERE o.user_id = u.user_id AND o.order_date > '2024-01-01'

);

2. JOIN vs SUBQUERY

Complexity: Subqueries can lead to inefficient execution plans.

Alternative: Use JOIN to improve performance when possible.

Example:

-- Using Subquery

SELECT username FROM users WHERE user_id = (SELECT user_id FROM orders WHERE order_id = 123);

-- Using JOIN (better performance)

SELECT u.username FROM users u JOIN orders o ON u.user_id = o.user_id WHERE o.order_id = 123;

3. LIKE vs Full-Text Search

Complexity: LIKE '%term%' is slow because it can’t use an index efficiently.

Alternative: Use full-text search for faster matching.

Example:

-- Using LIKE

SELECT * FROM articles WHERE content LIKE '%search_term%';

-- Using Full-Text Search (better performance)

SELECT * FROM articles WHERE MATCH(content) AGAINST('search_term');

4. UNION vs UNION ALL

Complexity: UNION removes duplicates, which can be costly.

Alternative: Use UNION ALL if you don’t need to remove duplicates.

Example:

-- Using UNION

SELECT username FROM users_2023

UNION

SELECT username FROM users_2024;

-- Using UNION ALL (better performance)

SELECT username FROM users_2023

UNION ALL

SELECT username FROM users_2024;

5. COUNT(*) vs COUNT(column_name)

Complexity: COUNT(*) can be more efficient, especially if there are no NULL values.

Alternative: Use COUNT(column_name) to count only non-null values.

Example:

-- Using COUNT(*)

SELECT COUNT(*) FROM users;

-- Using COUNT(column_name) (only non-null values)

SELECT COUNT(email) FROM users;

These alternatives can lead to significant performance improvements, especially with large datasets.

6. DISTINCT vs GROUP BY

Complexity: DISTINCT can be inefficient if used to remove duplicates.

Alternative: Use GROUP BY if you’re also aggregating data.

Example:

-- Using DISTINCT

SELECT DISTINCT user_id FROM orders;

-- Using GROUP BY (better when also aggregating)

SELECT user_id, COUNT(*) FROM orders GROUP BY user_id;

7. OR vs UNION

Complexity: Using OR can lead to inefficient query execution, especially with multiple conditions.

Alternative: Use UNION to split the query into separate parts.

Example:

-- Using OR

SELECT * FROM users WHERE city = 'Kochi' OR city = 'Bangalore';

-- Using UNION (better performance)

SELECT * FROM users WHERE city = 'Kochi'

UNION

SELECT * FROM users WHERE city = 'Bangalore';

8. LEFT JOIN vs INNER JOIN

Complexity: LEFT JOIN returns all rows from the left table, even if there’s no match in the right table, which can be slower.

Alternative: Use INNER JOIN if you only need matching rows.

Example:

-- Using LEFT JOIN

SELECT u.username, o.order_id FROM users u LEFT JOIN orders o ON u.user_id = o.user_id;

-- Using INNER JOIN (better performance when non-matching rows aren't needed)

SELECT u.username, o.order_id FROM users u INNER JOIN orders o ON u.user_id = o.user_id;

9. HAVING vs WHERE

Complexity: HAVING is generally used for filtering after aggregation, which can be less efficient.

Alternative: Use WHERE for filtering before aggregation when possible.

Example:

-- Using HAVING

SELECT user_id, COUNT(*) FROM orders GROUP BY user_id HAVING COUNT(*) > 5;

-- Using WHERE (better when filtering before aggregation)

SELECT user_id FROM orders WHERE order_date > '2024-01-01' GROUP BY user_id HAVING COUNT(*) > 5;

10. ORDER BY with LIMIT vs INDEX

Complexity: Sorting with ORDER BY and LIMIT can be slow without an appropriate index.

Alternative: Create an index to optimize the sort operation.

Example:

-- Using ORDER BY with LIMIT

SELECT username FROM users ORDER BY created_at DESC LIMIT 10;

-- Using an Index (create an index to speed up sorting)

CREATE INDEX idx_created_at ON users(created_at DESC);

SELECT username FROM users ORDER BY created_at DESC LIMIT 10;

These optimizations can lead to significant performance gains, especially when dealing with large datasets or complex queries. You can find more of them yourself using AI Tools or Blogs.

Conclusion

Choosing the “right” database is still far away from this point, and this is not just a technical discussion. We have Firebase Database, which triggers syncs to every one of its connected frontends when the database gets an increment. But we may not need that accuracy everywhere. This is not just a technical discussion but a strategic one that can make or break the whole business. Zerodha, the largest stock market player, still moves with just 30 member teams and a single monolith PostgreSQL instance, when its opponents have 2K+ team size and 30+ microservices. Remember, the database you choose today will shape your application’s growth tomorrow. So, we need careful evaluation. And if you’re still unsure, you can always get the support of a seasoned software development company to help you out.