A Simple Way to Perform Prediction on Continuous Data Using LSTM

Long Short-Term Memory networks, usually just called "LSTMs", are a special type of Recurrent Neural Network (RNN) that can learn long-term dependencies.

They were introduced by Hochreiter & Schmidhuber (1997) and were refined and popularized by many people in later work. They work very well on a wide variety of problems and are now widely used. LSTMs are explicitly designed to avoid the long-term dependency problem: remembering information for long periods is practically their default behavior, not something they struggle to learn!
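To make the "remembering is the default" point concrete, here is a minimal NumPy sketch of a single LSTM time step in the standard formulation with input, forget, and output gates. The function name `lstm_cell_step`, the weight shapes, and the order in which the four gate blocks are stacked are assumptions for illustration; libraries such as Keras use their own internal layout. The key line is the additive cell-state update `c_t = f * c_prev + i * g`: when the forget gate is near 1 and the input gate near 0, the cell state is carried forward almost unchanged.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_cell_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4*H, D) input weights, U: (4*H, H) recurrent weights, b: (4*H,) biases.
    Assumed (hypothetical) stacking order of the gate blocks:
    input, forget, candidate, output.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b      # all four gate pre-activations at once
    i = sigmoid(z[0:H])               # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])           # forget gate: how much of the old cell state to keep
    g = np.tanh(z[2 * H:3 * H])       # candidate values to write
    o = sigmoid(z[3 * H:4 * H])       # output gate: how much of the cell to expose
    c_t = f * c_prev + i * g          # additive update lets information persist
    h_t = o * np.tanh(c_t)            # new hidden state
    return h_t, c_t
```

With the forget-gate bias set high and the input-gate bias set low, `c_t` stays essentially equal to `c_prev`, which is how an LSTM can hold on to information across many steps.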

All RNNs have the form of a chain of repeating neural network units. In standard RNNs, this repeating unit will have a very simple structure, such as a tanh layer.
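The "chain of repeating units" can be sketched in a few lines of NumPy: the same tanh layer, with the same weights, is applied at every time step, and each step's hidden state feeds into the next. The names `rnn_forward`, `W_xh`, `W_hh`, and `b_h` are hypothetical, chosen for this sketch.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence xs of shape (T, D).

    The same tanh unit (same weights) is reused at every step.
    Returns all hidden states, shape (T, H).
    """
    h = np.zeros(W_hh.shape[0])          # initial hidden state
    hidden_states = []
    for x_t in xs:                       # one link of the chain per time step
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        hidden_states.append(h)
    return np.stack(hidden_states)
```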

A step-by-step guide

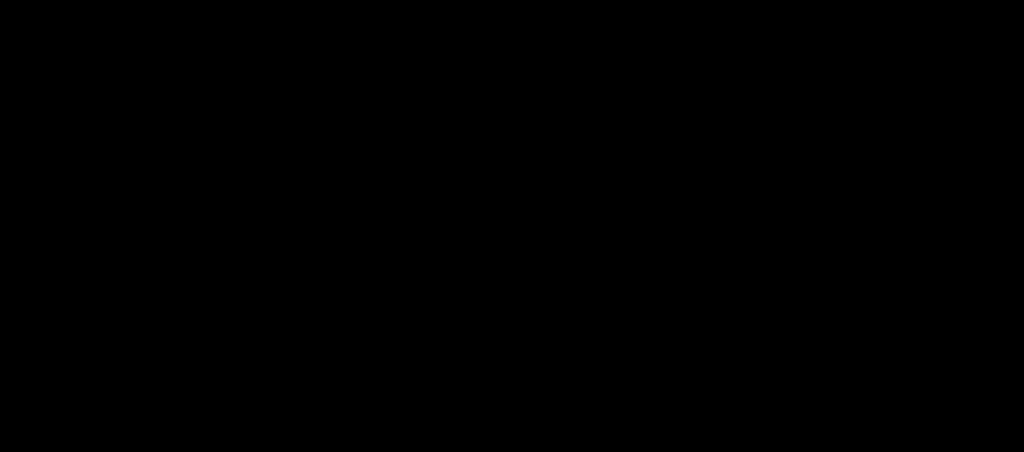

Before we can train the model, we need to import the continuous data. In this example I have shown an empty list, which you can populate with your own data.

You can also set the proportion of the data used for training; I recommend 80%. I am also using a Min-Max scaler in this program. Min-Max scaling is a way to normalize the input features/variables: each value x is mapped to (x - min) / (max - min), so every feature is transformed into the range [0, 1], with its minimum value becoming 0 and its maximum value becoming 1.
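A minimal sketch of the 80% split and Min-Max scaling for a single 1-D series, written in plain NumPy rather than with scikit-learn's `MinMaxScaler` (which applies the same `(x - min) / (max - min)` transform). The function name `split_and_scale` is hypothetical. One assumption differs from scaling the whole series at once: here the minimum and maximum come from the training portion only, so nothing from the test period leaks into training. As a consequence, test values outside the training range can land slightly outside [0, 1].

```python
import math
import numpy as np

def split_and_scale(values, train_ratio=0.8):
    """Chronologically split a 1-D series and Min-Max scale it.

    Assumption: scaling parameters are taken from the training portion only.
    Returns (scaled_series, train_len, data_min, data_max).
    """
    values = np.asarray(values, dtype=float)
    train_len = math.ceil(len(values) * train_ratio)   # first 80% -> training
    data_min = values[:train_len].min()
    data_max = values[:train_len].max()
    if data_max == data_min:
        raise ValueError("training data is constant; cannot Min-Max scale")
    # x' = (x - min) / (max - min): training values land in [0, 1]
    scaled = (values - data_min) / (data_max - data_min)
    return scaled, train_len, data_min, data_max
```

Keep `data_min` and `data_max` around: they are needed later to turn scaled predictions back into original units.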

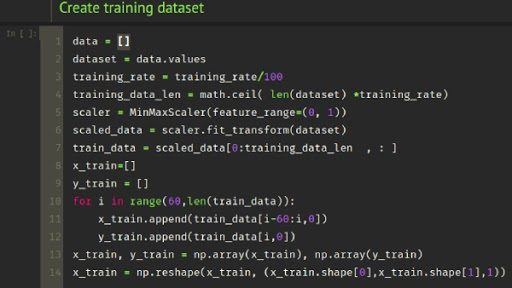

Now we can build our LSTM network. Here I have built four layers, the fourth of which is the output layer that produces the prediction. We use Adam as our optimizer. Adam is an extension of stochastic gradient descent that keeps running averages of the gradients and of their squares to adapt the learning rate of each parameter; it has been widely adopted in deep learning applications such as computer vision and natural language processing.
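Adam's update rule is short enough to write out. The sketch below implements one Adam step in NumPy with the commonly used default hyperparameters (beta1 = 0.9, beta2 = 0.999, eps = 1e-8) and then applies it to a toy one-variable problem. The function name `adam_step` and the toy objective are illustrative assumptions, not part of the original program; in practice Keras applies this update for you when the model is compiled with the Adam optimizer.

```python
import numpy as np

def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; returns (new_theta, new_m, new_v). t counts from 1."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients (momentum)
    v = beta2 * v + (1 - beta2) * grad ** 2     # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction for the zero init
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)  # per-parameter scaled step
    return theta, m, v

# Toy usage (hypothetical): minimise f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x, m, v = 0.0, 0.0, 0.0
for t in range(1, 3001):
    x, m, v = adam_step(x, 2 * (x - 3), m, v, t, lr=0.01)
```

Because each step is divided by the square root of the running squared-gradient average, the step size stays roughly on the scale of `lr` regardless of how large the raw gradients are.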

We can also set the number of epochs. It’s a good idea to plot the learning curve (training and validation loss per epoch) so that you can spot overfitting and stop training before it sets in.
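One way to act on the learning curve is early stopping: track the validation loss after each epoch and stop once it has not improved for a few epochs in a row. The plain-Python sketch below shows that logic on a list of per-epoch validation losses. The function `early_stopping_epoch` and its parameters are hypothetical names for this sketch; Keras offers the same idea through its `EarlyStopping` callback monitoring `val_loss`.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a per-epoch validation-loss curve.

    Training "stops" once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs. Epochs are 0-indexed.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0                                   # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:       # improvement: remember it, reset counter
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:               # curve has turned upward: overfitting
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1     # never triggered: ran all epochs
```

For example, a curve that bottoms out at epoch 3 and then rises for three epochs stops at epoch 6, and the weights from epoch 3 are the ones worth keeping.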

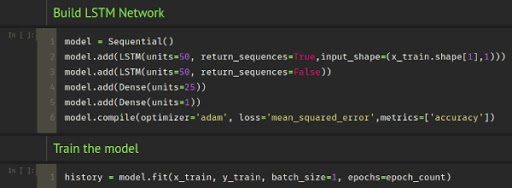

We set the training data length in the previous step; in this step, the remaining data is assigned as test data.
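For an LSTM, each sample is usually a window of the previous `lookback` values paired with the next value as the target. The sketch below assumes that setup; `make_windows` and `lookback` are hypothetical names. Test windows may reach up to `lookback` steps back before the split point, so the first test target still has a full history, while every test target itself comes from the held-out portion.

```python
import numpy as np

def make_windows(series, start, end, lookback):
    """Build (X, y) where each target series[i], start <= i < end,
    is paired with the `lookback` values that precede it."""
    X, y = [], []
    for i in range(max(start, lookback), end):
        X.append(series[i - lookback:i])   # input window (may reach before `start`)
        y.append(series[i])                # value to predict
    # LSTM layers expect (samples, time steps, features); here 1 feature.
    X = np.array(X, dtype=float).reshape(-1, lookback, 1)
    return X, np.array(y, dtype=float)
```

With a scaled series and the training length from the split, the training set would be `make_windows(scaled, 0, train_len, lookback)` and the test set `make_windows(scaled, train_len, len(scaled), lookback)`.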

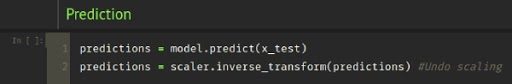

After training, we can run predictions on the test data to check how accurate the model is. Because we scaled the data earlier, it is important to rescale (inverse-transform) the predictions back to the original units before comparing them with the actual values.
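A minimal sketch of the rescaling and evaluation step, assuming the Min-Max parameters (`data_min`, `data_max`) were kept from the scaling step and using root-mean-squared error (RMSE), a common error measure for numeric predictions, as the accuracy check. The helper names are hypothetical; with scikit-learn you would call the fitted scaler's `inverse_transform` instead.

```python
import numpy as np

def inverse_min_max(scaled, data_min, data_max):
    """Undo Min-Max scaling: x = x_scaled * (max - min) + min."""
    return np.asarray(scaled, dtype=float) * (data_max - data_min) + data_min

def rmse(predictions, actual):
    """Root-mean-squared error, in the same units as the original data."""
    predictions = np.asarray(predictions, dtype=float)
    actual = np.asarray(actual, dtype=float)
    return float(np.sqrt(np.mean((predictions - actual) ** 2)))
```

Computing the error after the inverse transform keeps it in the data's own units, which makes it much easier to judge than an error on the [0, 1] scale.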

Wrapping Up

LSTMs were a big step in what we can accomplish with RNNs. It's natural to ask: is there another big step? A common view among researchers is: "Yes! There is a next step, and it's attention!" The idea is to let every step of an RNN pick information to look at from some larger collection of information.
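As a rough illustration of that idea (not something the program above uses), here is a NumPy sketch of scaled dot-product attention over a sequence of stored RNN hidden states: a query vector scores every stored state, a softmax turns the scores into weights, and the output is the weighted sum of the states. The function name and shapes are assumptions for this sketch.

```python
import numpy as np

def dot_product_attention(query, hidden_states):
    """query: (H,), hidden_states: (T, H).

    Returns (context, weights): context is (H,), weights is (T,) and sums to 1.
    """
    scores = hidden_states @ query / np.sqrt(query.shape[0])  # scaled similarity per step
    scores = scores - scores.max()                            # numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()           # softmax over time steps
    context = weights @ hidden_states                         # weighted sum of stored states
    return context, weights
```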